Artículo

Personalizing AI art boosts credit, not beauty

Revista: Technology in Society, 2026

Autores: Maryam Ali Khan, Elzé Siguté Mikalonyté, Sebastian Porsdam Mann, Peng Liu, Yueying Chu, Mario Attie-Picker, Mey Bahar Buyukbabani, Julian Savulescu, Ivar Rodríguez Hannikainen y Brian D. Earp

DOI: https://doi.org/10.1016/j.techsoc.2025.103055

Artículo

Is Consent-GPT valid? Public attitudes to generative AI use in surgical consent

Revista: AI & Society, 2025

Autores: Jemima Winifred Allen, Ivar Rodríguez Hannikainen, Julian Savulescu, Dominic Wilkinson y Brian David Earp

DOI: https://doi.org/10.1007/s00146-025-02644-9

Artículo

The Geometry of Language: Understanding LLMs in Bioethics

Revista: Journal of Bioethical Inquiry, 2025

Autores: Aníbal M. Astobiza

DOI: https://doi.org/10.1007/s11673-025-10480-1

Artículo

The role of LLMs in theory building

Revista: Social Sciences & Humanities Open, 2025

Autores: Aníbal M. Astobiza

DOI: https://doi.org/10.1016/j.ssaho.2025.101617

Artículo

The Ethical Paradox of Automation: AI Moral Status as a Challenge to the End of Human Work

Revista: Topoi, 2025

Autores: Joan Llorca Albareda

DOI: https://doi.org/10.1007/s11245-025-10250-z

Artículo

Percepciones profesionales sobre confianza en inteligencia artificial en España

Revista: Revista Bioética, 2025

Autores: Aníbal M. Astobiza, Ramón Ortega Lozano y Marcos Alonso

DOI: https://doi.org/10.1590/1983-803420253915ES

Artículo

AI-mediated healthcare and trust. A trust-construct and trust-factor framework for empirical research

Revista: Artificial Intelligence Review, 2025

Autores: Marcos Alonso, Aníbal M. Astobiza y Ramón Ortega Lozano

DOI: https://doi.org/10.1007/s10462-025-11306-7

Artículo

Trusting the (un)trustworthy? A new conceptual approach to the ethics of social care robots

Revista: AI & Society, 2025

Autores: Joan Llorca Albareda, Belén Liedo y María Victoria Martínez López

DOI: https://doi.org/10.1007/s00146-025-02274-1

Artículo

Why dignity is a troubling concept for AI ethics

Revista: Patterns, 2025

Autores: Jon Rueda, Txetxu Ausín, Mark Coeckelbergh, Juan Ignacio del Valle, Francisco Lara, Belén Liedo, Joan Llorca Albareda, Heidi Mertes, Robert Ranisch, Vera Lúcia Raposo, Bernd C. Stahl, Murilo Vilaça e Íñigo de Miguel

DOI: https://doi.org/10.1016/j.patter.2025.101207

Capítulo

Inteligencia artificial en la tecnología de la realidad virtual. Aspectos metafísicos, éticos y políticos

Libro: Biotecnoética: Biotecnología, Inteligencia Artificial, Ética. Editora CRV, 2025

Autores: Aníbal M. Astobiza, Txetxu Ausín y Daniel López Castro

DOI: https://doi.org/10.24824/978652517531.7

Capítulo

La ética de la automatización del trabajo humano en la era de la inteligencia artificial

Libro: Biotecnoética: Biotecnología, Inteligencia Artificial, Ética. Editora CRV, 2025

Autores: Joan Llorca Albareda

DOI: https://doi.org/10.24824/978652517531.7

Capítulo

Why Sex Robots Should Fear Us

Libro: Governing the Future, 2025

Autores: Nicholas Agar y Pablo García Barranquero

DOI: https://doi.org/10.1201/9781003226406

Artículo

Uncovering the gap: challenging the agential nature of AI responsibility problems

Revista: AI and Ethics, 2025

Autores: Joan Llorca Albareda

DOI: https://doi.org/10.1007/s43681-025-00685-w

Artículo

Who Achieves What? The Subjective Dimension of the Objective Goods of Life Extension in the Ethics of Digital Doppelgängers

Revista: The American Journal of Bioethics, 2025

Autores: Joan Llorca Albareda, Gonzalo Díaz Cobacho y Pablo García Barranquero

DOI: https://doi.org/10.1080/15265161.2024.2441707

Artículo

Does Momentary Outcome-Based Reflection Shape Bioethical Views? A Pre-Post Intervention Design

Revista: Cognitive Science, 2024

Autores: Carme Isern-Mas, Piotr Bystranowski, Jon Rueda e Ivar R. Hannikainen

DOI: https://doi.org/10.1111/cogs.70009

Artículo

Credit and blame for AI-generated content: Effects of personalization in four countries

Revista: Annals of the New York Academy of Sciences, 2024

Autores: Brian D. Earp, Sebastian Porsdam Mann, Peng Liu, Ivar R. Hannikainen, Maryam Ali Khan, Yueying Chu y Julian Savulescu

DOI: https://doi.org/10.1111/nyas.15258

Artículo

Repensando la antropología digital: estructurismo, mediación tecnológica e implicaciones epistémicas y morales

Revista: Enrahonar, 2024

Autores: Raúl Linares-Peralta y Joan Llorca Albareda

DOI: https://doi.org/10.5565/rev/enrahonar.1577

Artículo

Affordable Pricing of CRISPR Treatments is a Pressing Ethical Imperative

Revista: The CRISPR Journal, 2024

Autores: Jon Rueda, Íñigo de Miguel Beriain y Lluis Montoliu

DOI: https://doi.org/10.1089/crispr.2024.0042

Artículo

Legal Provisions on Medical Aid in Dying Encode Moral Intuition

Revista: Proceedings of the National Academy of Sciences, 2024

Autores: Ivar R. Hannikainen, Jorge Suárez, Luis Espericueta, Maite Menéndez-Ferreras y David Rodríguez-Arias

DOI: https://www.pnas.org/doi/10.1073/pnas.2406823121

Artículo

An ageless body does not imply transhumanism: A reply to Levin

Revista: Theoretical Medicine and Bioethics, 2024

Autores: Pablo García Barranquero y Joan Llorca Albareda

DOI: https://doi.org/10.1007/s11017-024-09685-z

Artículo

Gradualismo vs singularidad en la interpretación de riesgos asociados con la inteligencia artificial general

Revista: Revista de la Sociedad de Lógica, Metodología y Filosofía de la Ciencia en España, 2024

Autores: Miguel Moreno Muñoz

URL: https://solofici.org/revistas/

Artículo

Ethics and responsibility in biohybrid robotics research

Revista: Proceedings of the National Academy of Sciences, 2024

Autores: Rafael Mestre, Aníbal M. Astobiza, Victoria A. Webster-Wood, Matt Ryan y M. Taher A. Saif

DOI: https://doi.org/10.1073/pnas.2310458121

Artículo

Deepfakes, desinformación, discursos de odio y democracia en la era de la Inteligencia Artificial

Revista: Cuadernos del audiovisual, CAA, 2024

Autores: Aníbal M. Astobiza

DOI: https://doi.org/10.62269/cavcaa.20

Artículo

De la biomejora moral a la IA para la mejora moral: asistentes morales artificiales en la era de los riesgos globales

Revista: Enrahonar, 2024

Autores: Pablo Neira Castro

DOI: https://doi.org/10.5565/rev/enrahonar.1522

Artículo

Value change, reprogenetic technologies, and the axiological underpinnings of reproductive choice

Revista: Bioethics, 2024

Autores: Jon Rueda

DOI: https://doi.org/10.1111/bioe.13287

Artículo

Old by obsolescence: The paradox of aging in the digital era

Revista: Bioethics, 2024

Autores: Joan Llorca Albareda y Pablo García-Barranquero

DOI: https://doi.org/10.1111/bioe.13288

Libro

Ethics of Artificial Intelligence

Editorial: Springer Nature, 2024

Editores: Francisco Lara y Jan Deckers

DOI: https://doi.org/10.1007/978-3-031-48135-2

Capítulo

Ethics of Virtual Assistants

Libro: Ethics of Artificial Intelligence, 2024

Autores: Juan Ignacio del Valle, Joan Llorca Albareda y Jon Rueda

DOI: https://doi.org/10.1007/978-3-031-48135-2_5

Capítulo

The Singularity, Superintelligent Machines, and Mind Uploading: The Technological Future?

Libro: Ethics of Artificial Intelligence, 2024

Autores: Antonio Diéguez y Pablo García-Barranquero

DOI: https://doi.org/10.1007/978-3-031-48135-2_12

Capítulo

Ethics of Autonomous Weapon Systems

Libro: Ethics of Artificial Intelligence, 2024

Autores: Juan Ignacio del Valle y Miguel Moreno

DOI: https://doi.org/10.1007/978-3-031-48135-2_9

Capítulo

The moral status of AI entities

Libro: Ethics of Artificial Intelligence, 2024

Autores: Joan Llorca Albareda, Paloma García y Francisco Lara

DOI: https://doi.org/10.1007/978-3-031-48135-2_4

Capítulo

Exploring the Ethics of Interaction with Care Robots

Libro: Ethics of Artificial Intelligence, 2024

Autores: María Victoria Martínez-López, Gonzalo Díaz-Cobacho, Aníbal M. Astobiza y Blanca Rodríguez

DOI: https://doi.org/10.1007/978-3-031-48135-2_8

Capítulo

AI, Sustainability, and Environmental Ethics

Libro: Ethics of Artificial Intelligence, 2024

Autores: Cristian Moyano-Fernández y Jon Rueda

DOI: https://doi.org/10.1007/978-3-031-48135-2_11

Artículo

Liberal eugenics, coercion and social pressure

Revista: Enrahonar, 2024

Autores: Blanca Rodríguez

DOI: https://doi.org/10.5565/rev/enrahonar.1520

Comentario

The brain death criterion in light of value-based disagreement versus biomedical uncertainty

Revista: The American Journal of Bioethics, 2024

Autores: Daniel Martin, Gonzalo Díaz-Cobacho e Ivar R. Hannikainen

DOI: https://doi.org/10.1080/15265161.2023.2278566

Artículo

May Artificial Intelligence take health and sustainability on a honeymoon? Towards green technologies for multidimensional health and environmental justice

Revista: Global Bioethics, 2024

Autores: Cristian Moyano-Fernández, Jon Rueda, Janet Delgado y Txetxu Ausín

DOI: https://doi.org/10.1080/11287462.2024.2322208

Artículo

The global governance of genetic enhancement technologies: Justification, proposals, and challenges

Revista: Enrahonar, 2024

Autores: Jon Rueda

DOI: https://doi.org/10.5565/rev/enrahonar.1519

Artículo

Anticipatory gaps challenge the public governance of heritable human genome editing

Revista: Journal of Medical Ethics, 2024

Autores: Jon Rueda, Seppe Segers, Jeroen Hopster, Karolina Kudlek, Belén Liedo, Samuela Marchiori y John Danaher

DOI: https://doi.org/10.1136/jme-2023-109801

Artículo

Strong bipartisan support for controlled psilocybin use as treatment or enhancement in a representative sample of US Americans: need for caution in public policy persists

Revista: AJOB Neuroscience, 2024

Autores: Julian D. Sandbrink, Kyle Johnson, Maureen Gill, David B. Yaden, Julian Savulescu, Ivar R. Hannikainen y Brian D. Earp

DOI: https://doi.org/10.1080/21507740.2024.2303154

Artículo

Advance Medical Decision-Making Differs Across First- and Third-Person Perspectives

Revista: AJOB Empirical Bioethics, 2024

Autores: James Toomey, Jonathan Lewis, Ivar R. Hannikainen y Brian D. Earp

DOI: https://doi.org/10.1080/23294515.2024.2336900

Artículo

Human stem-cell-derived embryo models: When bioethical normativity meets biological ontology

Revista: Developmental Biology, 2024

Autores: Adrian Villalba, Jon Rueda e Íñigo de Miguel Beriain

DOI: https://doi.org/10.1016/j.ydbio.2024.01.009

Respuesta

Is ageing still undesirable? A reply to Räsänen

Revista: Journal of Medical Ethics, 2024

Autores: Pablo García-Barranquero, Joan Llorca Albareda y Gonzalo Díaz-Cobacho

DOI: https://doi.org/10.1136/jme-2023-109607

Artículo

Socratic nudges, virtual moral assistants and the problem of autonomy

Revista: AI & Society, 2024

Autores: Francisco Lara y Blanca Rodríguez

DOI: https://doi.org/10.1007/s00146-023-01846-3

Artículo

Anthropological crisis or crisis in moral status: a philosophy of technology approach to the moral consideration of artificial intelligence

Revista: Philosophy & Technology, 2024

Autores: Joan Llorca Albareda

DOI: https://doi.org/10.1007/s13347-023-00682-z

Artículo

Introducing Complexity in Anthropology and Moral Status: a Reply to Pezzano

Revista: Philosophy & Technology, 2024

Autores: Joan Llorca Albareda

DOI: https://doi.org/10.1007/s13347-024-00709-z

Artículo

Re-defining the human embryo: A legal perspective on the creation of embryos in research

Revista: EMBO reports, 2024

Autores: Íñigo de Miguel Beriain, Jon Rueda y Adrian Villalba

DOI: https://doi.org/10.1038/s44319-023-00034-0

Artículo

Pluralism in the determination of death

Revista: Current Opinion in Behavioral Sciences, 2024

Autores: Gonzalo Díaz-Cobacho y Alberto Molina-Pérez

DOI: https://doi.org/10.1016/j.cobeha.2024.101373

Artículo

From neurorights to neuroduties: the case of personal identity

Revista: Bioethics Open Research, 2024

Autores: Aníbal Monasterio Astobiza e Íñigo de Miguel Beriain

DOI: https://doi.org/10.12688/bioethopenres.17501.1

Artículo

Is ageing undesirable? An ethical analysis

Revista: Journal of Medical Ethics, 2024

Autores: Pablo García-Barranquero, Joan Llorca Albareda y Gonzalo Díaz-Cobacho

DOI: http://dx.doi.org/10.1136/jme-2022-108823

Artículo

Public Preferences for Digital Health Data Sharing: Discrete Choice Experiment Study in 12 European Countries

Revista: Journal of Medical Internet Research, 2023

Autores: Roberta Biasiotto, Jennifer Viberg Johansson, Melaku Birhanu Alemu, Virginia Romano, Heidi Beate Bentzen, Jane Kaye, … Aníbal M. Astobiza, … Deborah Mascalzoni.

DOI: https://www.jmir.org/2023/1/e47066

Artículo

AI-powered recommender systems and the preservation of personal autonomy

Revista: AI & Society, 2023

Autores: Juan Ignacio del Valle y Francisco Lara

DOI: https://doi.org/10.1007/s00146-023-01720-2

Artículo

Rebutting the Ethical Considerations regarding Consciousness in Human Cerebral Organoids: Challenging the Premature Assumptions

Revista: AJOB Neuroscience, 2023

Autores: Aníbal Monasterio Astobiza

DOI: https://doi.org/10.1080/21507740.2023.2188305

Artículo

El estatus moral de las entidades de inteligencia artificial

Revista: Disputatio, 2023

Autores: Joan Llorca Albareda

DOI: https://doi.org/10.5281/zenodo.8140967

Artículo

Artificial moral experts: asking for ethical advice to artificial intelligent assistants

Revista: AI and Ethics, 2023

Autores: Blanca Rodríguez y Jon Rueda

DOI: https://doi.org/10.1007/s43681-022-00246-5

Artículo

Do people believe that machines have minds and free will? Empirical evidence on mind perception and autonomy in machines

Revista: AI and Ethics, 2023

Autores: Aníbal Monasterio Astobiza

DOI: https://doi.org/10.1007/s43681-023-00317-1

Artículo

Neurorehabilitation of Offenders, Consent and Consequentialist Ethics

Revista: Neuroethics, 2023

Autores: Francisco Lara

DOI: https://doi.org/10.1007/s12152-022-09510-1

Artículo

Divide and Rule? Why Ethical Proliferation is not so Wrong for Technology Ethics

Revista: Philosophy & Technology, 2023

Autores: Joan Llorca Albareda y Jon Rueda

DOI: https://doi.org/10.1007/s13347-023-00609-8

Artículo

The morally disruptive future of reprogenetic enhancement technologies

Revista: Trends in Biotechnology, 2023

Autores: Jon Rueda, Jonathan Pugh y Julian Savulescu

DOI: https://doi.org/10.1016/j.tibtech.2022.10.007

Artículo

Contesting the Consciousness Criterion: A more radical approach to the moral status of nonhumans

Revista: AJOB Neuroscience, 2023

Autores: Joan Llorca Albareda y Gonzalo Díaz Cobacho

DOI: https://doi.org/10.1080/21507740.2023.2188280

Artículo

Innocence over utilitarianism: Heightened moral standards for robots in rescue dilemmas

Revista: European Journal of Social Psychology, 2023

Autores: Jukka Sundvall, Marianna Drosinou, Ivar R. Hannikainen et al.

DOI: https://doi.org/10.1002/ejsp.2936

Artículo

Why a Virtual Assistant for Moral Enhancement When We Could have a Socrates?

Revista: Science and Engineering Ethics, 2021

Autores: Francisco Lara

DOI: https://doi.org/10.1007/s11948-021-00318-5

Artículo

Inteligencia Artificial para el bien común (AI4SG): IA y los Objetivos de Desarrollo Sostenible

Revista: Arbor: Ciencia, Pensamiento y Cultura, 2021

Autores: Aníbal Monasterio Astobiza

DOI: https://doi.org/10.3989/arbor.2021.802007

Artículo

AI Ethics for Sustainable Development Goals

Revista: IEEE Technology and Society Magazine, 2021

Autores: Aníbal Monasterio Astobiza, Mario Toboso, Manuel Aparicio y Daniel López

DOI: https://ieeexplore.ieee.org/document/9445792

Artículo

Science, misinformation and digital technology during the Covid-19 pandemic

Revista: History and Philosophy of the Life Sciences, 2021

Autores: Aníbal Monasterio Astobiza

DOI: https://link.springer.com/article/10.1007/s40656-021-00424-4

XI Congreso de la Sociedad Española de Filosofía Analítica. Universidad de Sevilla. 1-3/10/2025

AI as ideal observer and support for morally-sensitive clinical decisions – Robert Gamboa Dennis.

Confianza de los profesionales sanitarios españoles en la inteligencia artificial – Aníbal M. Astobiza, Ramón Ortega Lozano, Marcos Alonso.

XI Congreso en Comunicación Política y Estrategias de Campaña: El gobierno de la inteligencia artificial. Universidad de Granada. 24-26/09/2025

Gobernanza deliberativa aumentada: grandes modelos de lenguaje (GMLs) e IA agéntica en procesos democráticos – Aníbal M. Astobiza.

III Seminario Iberoamericano de Ética Tecnológica (3SIETEC). Universidad Nacional Autónoma de México. 29-30/07/2025

Autonomía humana y sistemas de recomendación – Francisco Lara.

AI agents for health. Agency and autonomy in AI-driven healthcare – Aníbal M. Astobiza, Ramón Ortega, Marcos Alonso, Jorge Linares.

Manipulación algorítmica y autonomía humana, perspectivas éticas sobre sus riesgos, problemas y ¿beneficios? – Pablo Neira Castro.

Componentes epistémicos y pragmáticos de la interacción entre humanos y modelos de lenguaje – Miguel Moreno.

Autoría e IA: una propuesta híbrida – Joan Llorca Albareda, Jose Sánchez Benavente.

Los usos delictivos de la IA – Jorge Linares.

Exploración teórica de un asistente virtual socrático en el consultorio médico – Robert Gamboa Dennis.

XXII Semana de Ética y Filosofía Política (AEEFP): Disrupciones, crisis climática y nuevas tecnologías. Universidad de Granada. 29-31/01/2025

Una aproximación ética a los efectos de la IA en la autonomía humana – Pablo Neira Castro.

Autonomía y control en sistemas inteligentes. Implicaciones éticas – Miguel Moreno Muñoz.

Progreso tecno-moral – Jon Rueda.

Confianza, IA y contexto sanitario. Problemas conceptuales y éticos – Marcos Alonso, Ramón Ortega Lozano, Aníbal M. Astobiza.

Ética de la IA en salud. Hacia un marco conceptual propio – Robert Gamboa Dennis.

XII Jornadas Internacionales de Filosofía del Derecho: La Filosofía Jurídica en la Era del Neuroderecho. Universidad Nacional Autónoma de México. 19-22/11/2024

Ética de la IA, Neuroética y Neuroderechos. Una Alianza Transdisciplinar – Aníbal M. Astobiza.

7th AAAI/ACM Conference on AI, Ethics and Society. San José Convention Center (California). 21-23/10/2024

Uncovering the gap: challenging the agential nature of AI responsibility problems – Joan Llorca Albareda.

Workshop on New Technologies: Current and Future Political, Ethical, and Social Issues. Fiocruz, online. 17/10/2024

Dignity and AI Ethics – Jon Rueda.

Presentación del número especial de la revista Enrahonar. Universidad Complutense de Madrid. 10/10/2024

Mesa redonda: un giro político en el debate sobre la mejora genética – Jon Rueda, Francisco Javier Rodríguez-Alcázar, Lilian Bermejo-Luque, Marcos Alonso, Blanca Rodríguez López, Lydia Feito, Pablo Neira Castro.

4TU.Ethics/ESDiT Conference on Philosophy and Ethics of Technology. University of Twente. 2-4/10/2024

Techno-Moral Progress: Exploring the Technological Mediation of Better Morality – Jon Rueda.

Reproductive Autonomy in the Age of Artificial Intelligence – Jon Rueda.

Uncovering the gap: challenging the agential nature of AI responsibility problems – Joan Llorca Albareda.

Philosophy, Engineering, and Technology fPET 2024. Karlsruhe Institute of Technology. 17-20/09/2024

Symposium organization: Interdisciplinary Speculations for the Future of Biohybrid Robots – Rafael Mestre, Ned Barker, Sergey Astakhov, Aníbal M. Astobiza, Maria Guix, Joana Burd, Matt Ryan.

Techno-Moral Progress: Exploring the Technological Mediation of Better Morality – Jon Rueda.

Reproductive Autonomy in the Age of Artificial Intelligence – Jon Rueda.

Uncovering the gap: challenging the agential nature of AI responsibility problems – Joan Llorca Albareda.

The ethical paradox of automation: AI moral status as a challenge to the end of human work – Joan Llorca Albareda.

XI Congreso de la Sociedad de Lógica, Metodología y Filosofía de la Ciencia en España. Universidad de Oviedo. 16-19/07/2024

On the role of representation (and modeling) in neuroscience and AI: Insights from Mauricio Suarez’s inferential conception – Aníbal M. Astobiza.

Canadian Society for the History and Philosophy of Science. Université du Québec à Montréal. 19-23/06/2024

Old by obsolescence: The paradox of aging in the digital era – Pablo García Barranquero, Joan Llorca Albareda.

International Congress on Disaster Ethics. Universidad de Oviedo. 20-22/06/2024

Doomsday genetics: Rethinking worst-case scenarios in the ethics of genetic technologies – Jon Rueda.

17th World Congress of Bioethics. Hamad Bin Khalifa University (Qatar). 6-10/06/2024

Old by obsolescence: The paradox of aging in the digital era – Pablo García Barranquero, Joan Llorca Albareda.

FARUPEIB VIII Jornadas Anuales. FARUPEIB-España. 30/05/2024

Ética, paciente y salud: ¿cómo encajar humanismo y tecnología? – Aníbal M. Astobiza.

International Conference Existential Threats to Humanity: What are they and how to Address them. The Center for the Study of Bioethics, The Hastings Center and The Oxford Uehiro Centre for Practical Ethics. Budva, Montenegro. 30-31/05/2024

Doomsday genetics: Disasters ethics for (post)human genome editing – Jon Rueda.

II Seminario Iberoamericano de Ética Tecnológica. Universidad de Granada. 22-24/05/2024

Old by obsolescence: the paradox of aging in the digital era – Pablo García Barranquero, Joan Llorca Albareda.

Uncovering the gap: challenging the agential nature of AI responsibility problems – Joan Llorca Albareda.

Robots profesores y autonomía – Paloma García Díaz.

Benchmarks to Evaluate High Levels of Autonomy in AI Systems – Miguel Moreno Muñoz.

Realidad virtual y autonomía – Blanca Rodríguez López.

Autonomía personal y sistemas opacos de decisión algorítmica – Francisco Lara.

Beyond Responsible AI Principles: embedding ethics in the systems lifecycle – Juan Ignacio del Valle.

Does explainability matter? Assessing the explainability-accuracy trade-off in attitudes toward medical AI – Paolo Buttazzoni, Ivar R. Hannikainen.

IA y cognición moral: investigando el desarrollo moral con Grandes Modelos Lingüísticos – Aníbal M. Astobiza, Ramón Ortega, Rafael Mestre.

Inteligencia Artificial, Traducción y Ética: una aproximación – Mar Díaz-Millón, Gonzalo Díaz-Cobacho.

Por qué la mejora moral interna podría ser políticamente peor que la mejora moral externa – Pablo Neira Castro.

Autonomía reproductiva en la era de la inteligencia artificial – Jon Rueda.

Cartografiando la ética de la robótica sexual – Luis Espericueta, Gonzalo Díaz-Cobacho.

L’envelliment: una perspectiva multidisciplinaria. Instituto Interuniversitario López Piñero (Valencia). 24/04/2024

No, no quiero hacerme mayor: preguntas y problemas en la filosofía del envejecimiento (invitada) – Pablo García Barranquero.

VI Jornadas Novatores en Filosofía de la Ciencia y la Tecnología. Universidad de Salamanca. 10-11/04/2024

La dimensión agencial de la brecha de responsabilidad: equívocos y consecuencias teóricas – Joan Llorca Albareda.

II Congreso Ingeniería y Filosofía: Ingeniería del mal. Universidad de Oviedo. 14/03/2024

¿Dónde está la agencia en sistemas híbridos ser humano-máquina? – Aníbal M. Astobiza.

Ethics of Robotics Intelligence: Ethics and War Robots. Universidad Jaume I. 29/11/2023

Ethics of Bio-hybrid Systems: Objects, Tools, or Companions? – Aníbal M. Astobiza.

Jornadas Aspectos éticos y jurídicos de la medicina personalizada. Universidad de León. 28-29/09/2023

Impacto de la robótica asistencial sobre la confianza en entornos de cuidado (online) – Joan Llorca Albareda, Belén Liedo, María Victoria Martínez-López.

The International Society for the History, Philosophy and Social Studies of Biology. University of Toronto. 9-15/07/2023

No, we don’t want to grow old: Reasons why biological aging is undesirable – Pablo García Barranquero, Joan Llorca Albareda, Gonzalo Díaz Cobacho.

V Jornadas Novatores en Filosofía de la Ciencia y la Tecnología. Universidad de Salamanca. 26-27/04/2023

Razones por las que el envejecimiento (biológico) es indeseable – Pablo García Barranquero, Joan Llorca Albareda, Gonzalo Díaz Cobacho.

Philosophy, Engineering and Technology fPET 2023. Universidad de Delft, 19-21/04/2023

Reprogenetic technologies, future value change, and the axiological underpinnings of reproductive choice – Jon Rueda.

Biorobots as objects, tools or companions?: An ethical approach to understand bio-hybrid systems – Rafael Mestre & Aníbal Monasterio Astobiza.

VII Seminario de la Red Andaluza de Ética y Filosofía Política. Universidad de Córdoba. 31/03/2023

¿Es bueno envejecer? – Pablo García Barranquero, Joan Llorca Albareda, Gonzalo Díaz Cobacho.

XVI Congreso Internacional de Ética y Filosofía Política. Universidad Jaume I de Castellón, 2-4/02/2023

Sentido y límites de una ética de las máquinas – Francisco Lara.

Ética para avatares – Blanca Rodríguez López.

Estatus moral e inteligencia artificial: una panorámica de las problemáticas filosóficas de la consideración moral de la inteligencia artificial – Joan Llorca Albareda, Paloma García Díaz.

Ética, Trabajo e Inteligencia Artificial – Gonzalo Díaz-Cobacho, Joan Llorca Albareda.

VI Congreso Iberoamericano de Filosofía. Universidad de Oporto, 23-27/01/2023

La moralidad y la política de las cosas: la disolución político-moral del dualismo sujeto-objeto en las tecnologías de la inteligencia artificial – Joan Llorca Albareda.

Pluralismo en la determinación de la muerte, una posibilidad poco estudiada – Gonzalo Díaz-Cobacho.

Workshop on “Patient autonomy in the face of new technologies in medicine”. Uehiro Centre For Practical Ethics, Universidad de Oxford, 20/01/2023

Reprogenetic technologies, techno-moral changes, and the future of reproductive autonomy – Jon Rueda.

International Workshop “Logic, Philosophy and History of Medicine”. Universidad de Sevilla, 06-07/10/2022

No. We don't want to get old – Pablo García-Barranquero, Joan Llorca Albareda, Gonzalo Díaz-Cobacho.

Arqus Research Forum “Artificial Intelligence and its applications”. Facultad de Ingenierías Informática y de Telecomunicación de la Universidad de Granada, 26-28/09/2022

Panel Ethics of AI – Joan Llorca Albareda, Juan Ignacio Del Valle.

16th World Congress of Bioethics. Universidad de Basilea, 22/07/2022

“Just” accuracy? Procedural fairness demands explainability in AI-based medical resource allocations – Jon Rueda.

Oxford Bioxphi Summit, Universidad de Oxford, 30/06-01/07/2022

Is the Distinction between Killing and Letting Die Really Universal? – Blanca Rodríguez López.

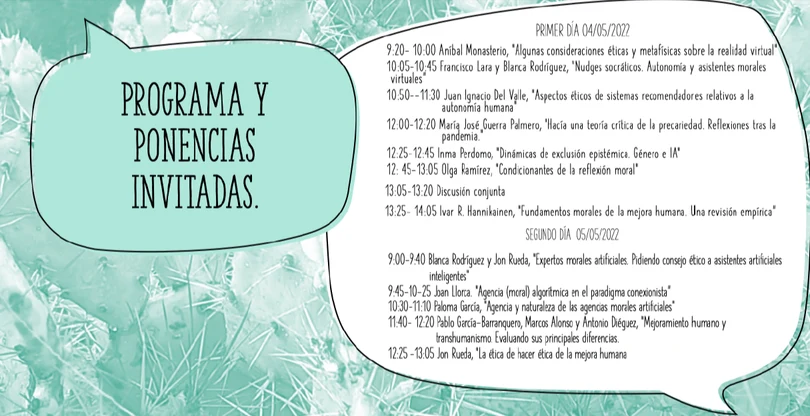

Workshop “Inteligencia Artificial, Ética de la Mejora Humana y Derechos Fundamentales”. Universidad de La Laguna, 04-05/05/2022

Autonomía y asistentes morales virtuales – Francisco Lara Sánchez & Blanca Rodríguez López.

Expertos morales artificiales. Pidiendo consejo ético a asistentes artificiales inteligentes – Blanca Rodríguez López, Jon Rueda.

Algunas consideraciones éticas y metafísicas sobre la RV – Aníbal Monasterio Astobiza.

Mejoramiento humano y transhumanismo: evaluando sus principales diferencias – Pablo García-Barranquero, Antonio Diéguez, Marcos Alonso.

La ética de hacer ética de la mejora humana – Jon Rueda.

Agencia y naturaleza de las agencias morales artificiales – Paloma García Díaz.

Aspectos éticos de sistemas recomendadores relativos a la autonomía humana – Juan Ignacio Del Valle.

Agencia (moral) algorítmica en el paradigma conexionista – Joan Llorca Albareda.

SOPhiA-Salzburg Conference for Young Analytic Philosophy. Universidad de Salzburgo, 11/09/2021

Genetic Enhancement, Human Extinction, and the Best Interests of Posthumanity – Jon Rueda.

Congreso Internacional de la AEEFP

Disrupciones, crisis climática y nuevas tecnologías.

GetTEC members helped organize and took an active part in the XXII Semana de Ética y Filosofía Política, the international conference that the Asociación Española de Ética y Filosofía Política holds every two years. The event took place on 29, 30 and 31 January 2025 at the Facultad de Filosofía y Letras of the Universidad de Granada. Conference website.

Seminario Iberoamericano de Ética Tecnológica

The Grupo de investigación en Ética Tecnológica (GetTEC), the Federal University of Rio de Janeiro (UFRJ) and the Oswaldo Cruz Foundation (Fiocruz) organized the II Seminario Iberoamericano de Ética de la Tecnología. The event was held as part of the AutAI project (Inteligencia artificial y autonomía humana. Hacia una ética para la protección y mejora de la autonomía en sistemas recomendadores, robótica social y realidad virtual; PID2022-137953OB-I00). Project website.

Workshop

Inteligencia artificial, Ética de la Mejora humana y Derechos humanos.

GetTEC met on 4 and 5 May 2022 at the Universidad de La Laguna (Tenerife) with the research group Género, Ciudadanía y Culturas. Aproximaciones desde la Teoría Feminista (GNCACADAF).

At the workshop, the members of the research group presented the current state of their work and held a fruitful exchange with the members of the group led by Professor María José Guerra Palmero.